
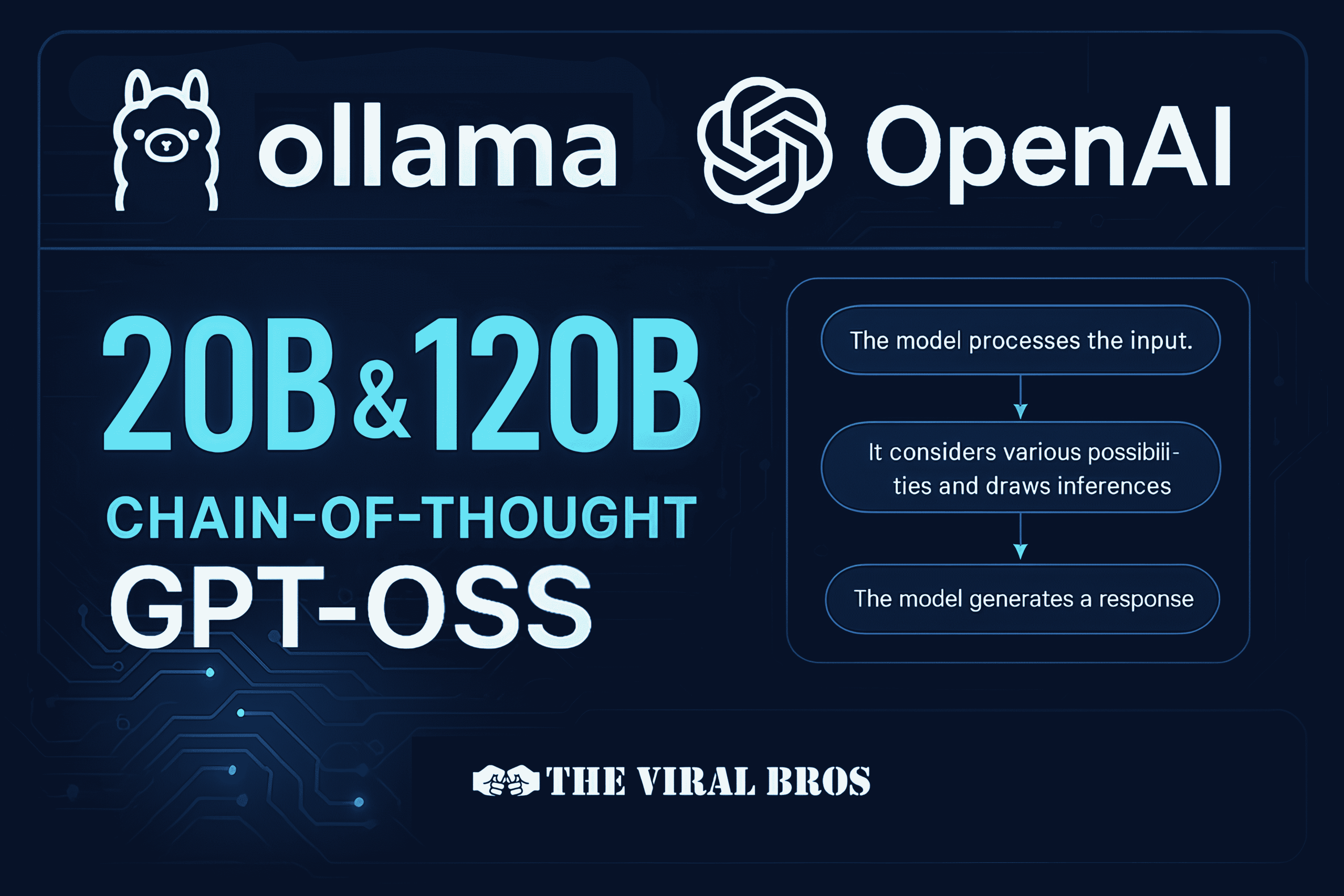

OpenAI’s GPT-OSS Just Landed in Ollama — And It’s Quietly About to Break AI Rules

GPT-OSS Ollama integration unlocks 20B & 120B models with reasoning, tool use, and full local control. Learn the twist most devs are missing.

Estimated reading time: 5 minutes

Something weird just happened in the AI world.

OpenAI — the same company famous for locking GPT-4 in a vault — just tossed the keys to the open-source crowd.

And somehow… Ollama walked away with both the 20B and 120B GPT-OSS models in its pocket.

Here’s the part almost nobody’s talking about:

This isn’t just “you can run GPT locally now.”

It’s a blueprint for private, offline, self-owned AI agents that think, plan, and act — no API calls, no subscription, no data leaks.

Let’s crack open why this is bigger than it looks.

🧠 GPT-OSS: More Than Just “Open Source”

These aren’t stripped-down student projects.

GPT-OSS 20B and 120B come with:

- Native chain-of-thought reasoning (you can literally see how it thinks)

- Tool use + function calling baked in — no hacking required

- Agent-friendly architecture for workflows beyond chat

Ollama didn’t just host them — they built a developer-ready pipeline so you can go from install to fully-functional agent in minutes.
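To make "tool use baked in" concrete, here is a minimal sketch of the round trip: describe a Python function to the model, then run whatever tool calls come back. It assumes Ollama's local chat endpoint at `http://localhost:11434/api/chat` and the `gpt-oss:20b` model tag. The `get_word_count` tool is hypothetical, and the `tool_name` field on the reply is an assumption about the message format, so check it against your Ollama version.

```python
import json
import urllib.request

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"  # assumed default local endpoint


def get_word_count(text: str) -> int:
    """Hypothetical local tool: count whitespace-separated words."""
    return len(text.split())


# Registry of Python callables the model may request by name.
TOOLS = {"get_word_count": get_word_count}

# JSON-schema description of the tool, sent alongside the chat messages.
TOOL_SPECS = [{
    "type": "function",
    "function": {
        "name": "get_word_count",
        "description": "Count the words in a piece of text.",
        "parameters": {
            "type": "object",
            "properties": {"text": {"type": "string"}},
            "required": ["text"],
        },
    },
}]


def build_request(messages, model="gpt-oss:20b"):
    """Build the JSON body for a non-streaming chat call with tools attached."""
    return {"model": model, "messages": messages, "tools": TOOL_SPECS, "stream": False}


def run_tool_calls(message):
    """Execute every tool call in an assistant message and return tool-role replies."""
    replies = []
    for call in message.get("tool_calls", []):
        fn = call["function"]
        args = fn["arguments"]
        if isinstance(args, str):  # tolerate arguments delivered as a JSON string
            args = json.loads(args)
        result = TOOLS[fn["name"]](**args)
        replies.append({"role": "tool", "tool_name": fn["name"], "content": str(result)})
    return replies


def chat(messages):
    """POST to the local server (needs Ollama running with the model pulled)."""
    body = json.dumps(build_request(messages)).encode()
    req = urllib.request.Request(OLLAMA_CHAT_URL, data=body,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)["message"]
```

In a real loop you would append the assistant message and the replies from `run_tool_calls` to `messages`, then call `chat` again so the model can use the results.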

🚀 Why Ollama x GPT-OSS Is a Game-Changer

1. Fully Offline on Local Machine: No Cloud, No Fees, No Limits.

Run it entirely offline by downloading Ollama’s new app for macOS or Windows. If you’ve got 16GB of VRAM, you’re good for 20B. 120B needs far more horsepower: OpenAI cites a single 80GB GPU.
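A quick way to confirm you really are set up for offline use is to ask the local server which models it already has on disk. This sketch assumes Ollama's default port and its `/api/tags` listing endpoint. The helper names are made up for illustration.

```python
import json
import urllib.request

TAGS_URL = "http://localhost:11434/api/tags"  # assumed default; lists locally pulled models


def installed_models(tags_response: dict) -> set:
    """Extract model names from an /api/tags response body."""
    return {m["name"] for m in tags_response.get("models", [])}


def is_ready(model: str, tags_response: dict) -> bool:
    """True if the given model tag is already available locally."""
    return model in installed_models(tags_response)


def fetch_tags(url: str = TAGS_URL) -> dict:
    """Query the local Ollama server; this only talks to localhost."""
    with urllib.request.urlopen(url, timeout=5) as resp:
        return json.load(resp)
```

With the server running, `is_ready("gpt-oss:20b", fetch_tags())` tells you whether the 20B model can be used without touching the network.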

2. Agent-First by Default

Ollama’s integration supports Python tools, structured outputs, local APIs, multi-step planning, and web integration for full AI agents right out of the box. Build AI assistants that act, not just answer.
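Structured outputs are what make multi-step planning practical, because your code can rely on the shape of each reply. Here is a rough sketch, assuming Ollama's `format` request field accepts a JSON schema. The `STEP_SCHEMA` layout is a hypothetical example, not something Ollama or GPT-OSS prescribes.

```python
import json

# Hypothetical schema for one planning step of an agent.
STEP_SCHEMA = {
    "type": "object",
    "properties": {
        "action": {"type": "string"},
        "argument": {"type": "string"},
        "done": {"type": "boolean"},
    },
    "required": ["action", "argument", "done"],
}


def build_structured_request(prompt: str, model: str = "gpt-oss:20b") -> dict:
    """Build a chat request body that asks for JSON matching STEP_SCHEMA."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "format": STEP_SCHEMA,
        "stream": False,
    }


def parse_step(content: str) -> dict:
    """Parse the model's reply and verify the required keys are present."""
    step = json.loads(content)
    missing = [key for key in STEP_SCHEMA["required"] if key not in step]
    if missing:
        raise ValueError(f"missing keys: {missing}")
    return step
```

Checking the reply yourself is deliberate: a schema hint reduces malformed output, but the agent loop should still fail loudly when a step comes back incomplete.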

3. Transparent Reasoning

Ever wanted to see why an AI gave an answer? Now you can: these models show their work, so you can inspect and even debug the “thought process” mid-flow. That is still a rare feature, and it boosts trust and usability.
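If you want to keep those reasoning traces around for debugging, one option is to write them to a local log. This sketch assumes the assistant message carries the reasoning in a separate `thinking` field next to `content`. Treat that field name as an assumption to verify against your Ollama version. The log format is made up for illustration.

```python
import json
import time


def split_reasoning(message: dict):
    """Return (reasoning, answer) from an assistant message."""
    return message.get("thinking", ""), message.get("content", "")


def log_trace(path: str, prompt: str, message: dict) -> dict:
    """Append one prompt/reasoning/answer record to a JSON Lines file."""
    reasoning, answer = split_reasoning(message)
    record = {
        "ts": time.time(),
        "prompt": prompt,
        "reasoning": reasoning,
        "answer": answer,
    }
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record
```

One record per line keeps the log easy to grep and easy to replay when an agent takes a wrong turn.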

4. A Different League from LLaMA or Mistral

While LLaMA and Mistral are strong, GPT-OSS comes pre-wired for multi-tool workflows: it is tuned for structured reasoning and action chaining out of the box.

⚠️ The Trap Most Devs Are Falling Into

A lot of people are treating GPT-OSS in Ollama like a demo toy: install, run “Hello World,” try a few prompts, move on. Stop there and you’re leaving most of its value on the table.

That’s a waste. The magic happens when you build private, persistent, multi-tool agents that:

- Keep their own long-term memory

- Automate real-world tasks

- Act as privacy-first copilots without ever touching OpenAI’s servers

- Stack into multi-agent workflows

Once you see it as your own personal AI infrastructure, the game changes.
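Long-term memory sounds exotic, but at its simplest it is a local database the agent reads before each turn and writes to after it. Here is a minimal sketch using Python's built-in `sqlite3`. The `MemoryStore` class and its table layout are hypothetical, one of many ways to do this.

```python
import sqlite3


class MemoryStore:
    """Tiny persistent chat memory backed by a local SQLite file."""

    def __init__(self, path: str = "agent_memory.db"):
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS memory ("
            "id INTEGER PRIMARY KEY, role TEXT NOT NULL, content TEXT NOT NULL)"
        )
        self.conn.commit()

    def remember(self, role: str, content: str) -> None:
        """Store one message permanently."""
        self.conn.execute(
            "INSERT INTO memory (role, content) VALUES (?, ?)", (role, content)
        )
        self.conn.commit()

    def recall(self, limit: int = 20) -> list:
        """Return the most recent messages, oldest first, ready to send as context."""
        rows = self.conn.execute(
            "SELECT role, content FROM memory ORDER BY id DESC LIMIT ?", (limit,)
        ).fetchall()
        return [{"role": role, "content": content} for role, content in reversed(rows)]
```

Because the file lives on your disk, the agent picks up where it left off after a restart, and nothing is synced anywhere.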

💡 5 Real, High-Impact Projects You Can Build Right Now

- 📄 Private research analyst: scans docs, summarizes them, and stores findings offline, reasoning out loud and citing everything.

- 📅 Smart local scheduler: connects with your local calendar and email, with no cloud syncing, and books your meetings for you.

- 📚 Guided tutor: guides students step by step through coding, math, and complex problems while showing exactly how it reasons.

- 🔐 Knowledge vault: a fully offline, AI-searchable database of everything you’ve ever read or written.

- 💬 On-device customer service bot: perfect for businesses that can’t risk sending client data to the cloud.
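The research analyst and knowledge vault ideas share one core step: find the relevant local documents, then hand them to the model with instructions to cite. Here is a deliberately simple sketch of that step. The keyword-overlap ranking and the function names are assumptions for illustration, and a real build would likely use embeddings or a proper search index.

```python
import re


def tokenize(text: str) -> set:
    """Lowercase the text and split it into a set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top_documents(query: str, docs: dict, k: int = 2) -> list:
    """Rank documents (name -> text) by how many tokens they share with the query."""
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(text)), name) for name, text in docs.items()]
    scored = [pair for pair in scored if pair[0] > 0]  # drop documents with no overlap
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # best score first, then by name
    return [name for _, name in scored[:k]]


def build_cited_prompt(query: str, docs: dict, k: int = 2) -> str:
    """Assemble a prompt that numbers each source so the model can cite it."""
    names = top_documents(query, docs, k)
    sources = "\n".join(
        f"[{i}] {name}: {docs[name]}" for i, name in enumerate(names, start=1)
    )
    return (
        "Answer using only these sources and cite them by number.\n"
        f"{sources}\n\nQuestion: {query}"
    )
```

The resulting string goes to the local model as the user message, so the documents never leave your machine.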

🧨 The Hidden Message from OpenAI — and Why It Matters More Than You Think

OpenAI’s release quietly acknowledges something huge: the next AI wave is agentic and local-first.

And Ollama? They’re not just participating — they’re building the rails for it.

If you’ve been waiting for the “privacy + power” sweet spot in AI… this is it.

🧵 TL;DR

OpenAI gave us GPT-OSS. Ollama made it run locally like a dream. This isn’t about faster chat — it’s about building your own AI infrastructure with no fees, no cloud, and no middleman.

Learn more about OpenAI’s latest advances on their official website.

❓ FAQs

Everything You’re Curious About:

Yes, GPT-OSS runs fully offline once it is installed through Ollama. Internet is only needed for the initial download, or if your tools require it (e.g., live web search).

120B delivers deeper reasoning, but it’s much heavier. 20B is still strong enough for most agentic workflows and runs on more modest setups.

You can fine-tune GPT-OSS, and Ollama supports custom models. But fine-tuning 120B requires serious compute and storage. Parameter-efficient tuning methods (LoRA, QLoRA) make 20B fine-tuning realistic for hobbyists.

Since data never leaves your machine, you sidestep most cloud compliance risks. That said, responsibility for secure storage and access control is still yours.

In many cases GPT-OSS is the better fit: its stronger native reasoning and function calling make it ideal for coding tools, local copilots, and multi-step workflows.

OpenAI has made no promises about further open releases. GPT-OSS is a rare move for them, and the AI community is watching closely to see if this was a one-time drop or the start of a trend.

Yes, you can combine GPT-OSS with other models. Ollama lets you swap or chain models, so GPT-OSS can handle logic while another model handles style or language.
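Chaining can be as plain as feeding one model's answer into another model's prompt. The sketch below keeps the model call injectable, so the wiring is visible without a running server. The `chain` helper and the `style-model` tag are hypothetical placeholders, and `gpt-oss:20b` is the assumed tag for the logic stage.

```python
from typing import Callable

# A chat function takes (model_tag, prompt) and returns the model's text reply.
ChatFn = Callable[[str, str], str]


def chain(prompt: str, chat_fn: ChatFn,
          logic_model: str = "gpt-oss:20b",
          style_model: str = "style-model") -> str:
    """Let one model reason out a draft, then let a second model polish it."""
    draft = chat_fn(logic_model, prompt)
    rewrite_prompt = (
        "Rewrite the following answer in a friendly tone, keeping every fact:\n\n"
        f"{draft}"
    )
    return chat_fn(style_model, rewrite_prompt)
```

In practice `chat_fn` would wrap a call to your local Ollama server. Passing it in as an argument also makes the pipeline easy to test with a stub.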

GPT-OSS is designed for action, not just text — function calling, structured output, and multi-tool orchestration come native.

Yes, you can view the model’s reasoning, and Ollama lets you log it, making it great for debugging or educational tools.

For many agentic tasks, yes, GPT-OSS can stand in for GPT-4, especially when combined with local APIs, tools, and memory. But GPT-4 still wins in raw generalization.

OpenAI released GPT-OSS under the permissive Apache 2.0 license, which allows research and commercial use, but check the repo for fine print.